Manage Multithreading

In a multithreaded program, the order in which actions are performed can be different each time the program runs. This is because the operating system decides when each thread runs, and it bases those decisions on the current workload. When you design asynchronous solutions using multithreading, you must make sure that this uncertainty about the timing of thread activity does not affect the behavior of the application or the results it produces.
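Here is a minimal sketch of this nondeterminism. The programs in this post are C# and appear only as screenshots, so the sketches use Java as a stand-in. Every class and method name in them (here `ThreadOrderDemo` and `runThreads`) is hypothetical. Each thread records its id in a shared list. All of the ids are always present, but their order may change from run to run.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Sketch: start several threads that each record their own id.
// The OS decides when each thread runs, so the recorded order may change
// from one run to the next; only the set of ids is guaranteed.
class ThreadOrderDemo {
    static List<Integer> runThreads(int count) {
        // Synchronized wrapper so that concurrent add() calls are safe.
        List<Integer> finished = Collections.synchronizedList(new ArrayList<>());
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            final int id = i;
            Thread t = new Thread(() -> finished.add(id));
            threads.add(t);
            t.start();
        }
        try {
            for (Thread t : threads) {
                t.join(); // wait for every thread before reading the list
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        // After join() no thread is still adding, and all adds are visible here.
        return new ArrayList<>(finished);
    }

    public static void main(String[] args) {
        // Run it a few times: the printed order may differ between runs.
        for (int run = 0; run < 3; run++) {
            System.out.println(runThreads(5));
        }
    }
}
```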

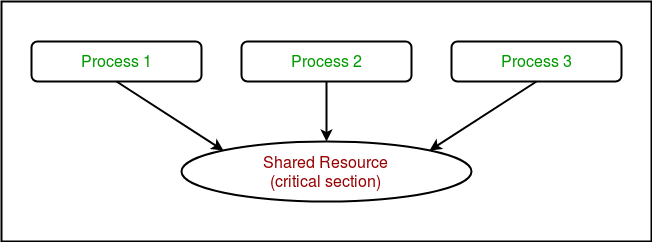

Resource Synchronization

When an application is spread over several asynchronous tasks, it becomes impossible to predict the sequencing and timing of individual actions. An application has to be designed with the understanding that any action may be interrupted at any point, in a way that could corrupt the data it shares with other tasks.

The program above shows a simple application that adds up the numbers in an array. The array is very large (500,000,000 elements), so iterating over it in a single task to calculate the sum takes a while.

This program can be modified to use multiple processors by creating tasks. Each task calculates the sum over a small portion of the large array, and the results of all the tasks are combined into the total for the whole array. The program below creates a number of tasks, each of which runs the method AddRangeOfValues, which adds the contents of a particular range of the array to a total value. The first task adds the values in elements 0 to 999, the second task does the same for elements 1000 to 1999, and so on up to the end of the array.
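The following is a rough Java sketch of the task-per-range version. It makes several assumptions. A fixed thread pool stands in for C# tasks. The `int` array is much smaller than 500,000,000 elements. The names (`RacySum`, `addRangeOfValues`, `runAll`) are hypothetical and modeled on `AddRangeOfValues` and `sharedTotal`. The sketch deliberately updates the shared total without any synchronization, so its result can differ from the sequential sum.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Sketch (Java stand-in for the C# program): split the array into ranges of
// RANGE_SIZE elements and let each task add its range to one shared total.
// WARNING: intentionally unsynchronized, so totals can come out wrong.
class RacySum {
    static final int RANGE_SIZE = 1000; // elements per task, as in the text
    static long sharedTotal = 0;        // shared by all tasks, unprotected

    static long sequentialSum(int[] items) {
        long total = 0;
        for (int item : items) total += item;
        return total;
    }

    // Adds items[start..end) to sharedTotal one element at a time.
    static void addRangeOfValues(int[] items, int start, int end) {
        for (int i = start; i < end; i++) {
            sharedTotal += items[i]; // read-modify-write: not atomic
        }
    }

    static long parallelSum(int[] items) {
        sharedTotal = 0;
        List<Runnable> tasks = new ArrayList<>();
        for (int start = 0; start < items.length; start += RANGE_SIZE) {
            final int s = start;
            final int e = Math.min(start + RANGE_SIZE, items.length);
            tasks.add(() -> addRangeOfValues(items, s, e));
        }
        runAll(tasks);
        return sharedTotal;
    }

    // Runs every task on a fixed-size pool and waits for all of them to finish.
    static void runAll(List<Runnable> tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(
                Runtime.getRuntime().availableProcessors());
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (Runnable task : tasks) futures.add(pool.submit(task));
            for (Future<?> f : futures) f.get(); // also makes each task's writes visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        int[] items = new int[10_000_000]; // far smaller than the 500,000,000 in the text
        for (int i = 0; i < items.length; i++) items[i] = i % 100;
        System.out.println("Sequential: " + sequentialSum(items));
        System.out.println("Parallel:   " + parallelSum(items)); // may differ from run to run
    }
}
```

With fewer than 1,000 elements there is only one task, so the result is exact. With millions of elements on a multi-core machine, the parallel total can come out lower than the sequential one.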

However, if you run the two programs above, their outputs do not match. This is caused by a resource synchronization problem in the multi-task version. Although each update to the shared variable sharedTotal looks like a single, simple statement, the problem is caused by the way all of the tasks interact over that same shared value. Consider the following sequence of events:

1) Task 1 starts to update sharedTotal. It fetches the value of the variable from memory into the CPU and adds the contents of an array element to it. But just as the CPU is about to write the result back to memory, the operating system stops Task 1 and switches to Task 2.

2) Task 2 also wants to update sharedTotal. It fetches the value, adds its element, and writes the result back to memory. At this point the operating system might switch back to Task 1.

3) Task 1 writes the value of sharedTotal that it was working on from the CPU back to memory. This overwrites the value written by Task 2, so the update performed by Task 2 is lost.

This is called a race condition. There is a race between two threads, and the behavior of the program depends on which thread gets to the sharedTotal variable first. It's impossible to predict the output of such a program: it might work correctly on some computers and then fail on a machine with fewer or more processors.
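The three steps above can be replayed deterministically on a single thread. To do this, split `sharedTotal += element` into the read, add, and write that the CPU actually performs. This is only a simulation for illustration, not real concurrent code, and `LostUpdateReplay` and `replay` are hypothetical names.

```java
// Replays, step by step on one thread, the interleaving described above.
// Each task's "sharedTotal += element" is split into read, add, and write.
class LostUpdateReplay {
    static int replay(int start, int task1Element, int task2Element) {
        int sharedTotal = start;          // the value held in memory

        int task1Register = sharedTotal;  // 1) Task 1 reads sharedTotal into the CPU
        task1Register += task1Element;    //    and adds its element, then is paused

        int task2Register = sharedTotal;  // 2) Task 2 reads the same, unchanged value
        task2Register += task2Element;
        sharedTotal = task2Register;      //    and writes its result back to memory

        sharedTotal = task1Register;      // 3) Task 1 resumes and overwrites Task 2's result
        return sharedTotal;
    }

    public static void main(String[] args) {
        // The correct total would be 100 + 5 + 7 = 112, but Task 2's update is lost.
        System.out.println(replay(100, 5, 7)); // prints 105
    }
}
```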

Locks

A program can use locking to ensure that a given action is atomic. An atomic action is performed to completion without any other task being able to interfere with it partway through. Access to an atomic action is controlled by a locking object. You can think of this as the key to a restaurant's restroom. To get access to the restroom, you ask the cashier for the key. You then go and use the restroom and, once you have finished, hand the key back to the cashier. If the restroom is in use when you ask for the key, you must wait until the person in front of you returns it before you can go and use the restroom.

The program above shows the creation of the locking object. The object is called sharedTotalLock, and it controls access to the statement that updates the value of the shared total. The lock statement is followed by a statement or block of code that is performed atomically: while one task is running the code protected by the lock, no other task can enter that code. This program gives the same output as the version in which a single task does all the work.
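In Java, the closest equivalent of the C# `lock` statement is a `synchronized` block on a dedicated lock object. The sketch below is a hypothetical Java port of the locked version. It reuses the name `sharedTotalLock` from the text and assumes the same range-per-task layout as before. `LockedSum` and `runAll` are illustrative names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Sketch: every update of the shared total is guarded by sharedTotalLock.
class LockedSum {
    static final int RANGE_SIZE = 1000;
    static final Object sharedTotalLock = new Object(); // guards sharedTotal
    static long sharedTotal = 0;

    static void addRangeOfValues(int[] items, int start, int end) {
        for (int i = start; i < end; i++) {
            // Only one thread at a time can hold sharedTotalLock, so this
            // read-modify-write can no longer interleave with another task's.
            synchronized (sharedTotalLock) {
                sharedTotal += items[i];
            }
        }
    }

    static long parallelSum(int[] items) {
        synchronized (sharedTotalLock) {
            sharedTotal = 0;
        }
        List<Runnable> tasks = new ArrayList<>();
        for (int start = 0; start < items.length; start += RANGE_SIZE) {
            final int s = start;
            final int e = Math.min(start + RANGE_SIZE, items.length);
            tasks.add(() -> addRangeOfValues(items, s, e));
        }
        runAll(tasks);
        synchronized (sharedTotalLock) {
            return sharedTotal;
        }
    }

    // Runs every task on a fixed-size pool and waits for all of them to finish.
    static void runAll(List<Runnable> tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(
                Runtime.getRuntime().availableProcessors());
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (Runnable task : tasks) futures.add(pool.submit(task));
            for (Future<?> f : futures) f.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        int[] items = new int[10_000_000];
        for (int i = 0; i < items.length; i++) items[i] = i % 100;
        System.out.println("Locked parallel total: " + parallelSum(items));
    }
}
```

Every element now acquires and releases `sharedTotalLock`. That is exactly why this version loses the benefit of running in parallel.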

However, this program removes the benefit of multitasking and takes longer to execute, because the tasks are no longer running in parallel. Most of the time, tasks are queued waiting for access to the shared total value. Adding a lock solved the problem of corrupted updates, but it has also stopped the tasks from executing in parallel, because they are all waiting for access to a variable that they all need to use.

The program above solves this problem. Rather than updating the shared total every time it adds an element from the array, this version of the method adds up its range of elements first and then updates the shared total only once. Because each task handles 1,000 elements, the shared variable is now used a thousandth as often, and the program performs a lot better.
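Here is a hypothetical Java version of the improved method. Each task sums its range into a local variable that no other task can see. It then takes the lock once to add that subtotal to the shared total. `SubtotalSum` and its helpers are illustrative names, not the original C# code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Sketch: each task sums its own range privately, then locks only once.
class SubtotalSum {
    static final int RANGE_SIZE = 1000;
    static final Object sharedTotalLock = new Object(); // guards sharedTotal
    static long sharedTotal = 0;

    static void addRangeOfValues(int[] items, int start, int end) {
        long subTotal = 0; // local to this task, so no lock is needed here
        for (int i = start; i < end; i++) {
            subTotal += items[i];
        }
        // One short locked update per task instead of one per element.
        synchronized (sharedTotalLock) {
            sharedTotal += subTotal;
        }
    }

    static long parallelSum(int[] items) {
        synchronized (sharedTotalLock) {
            sharedTotal = 0;
        }
        List<Runnable> tasks = new ArrayList<>();
        for (int start = 0; start < items.length; start += RANGE_SIZE) {
            final int s = start;
            final int e = Math.min(start + RANGE_SIZE, items.length);
            tasks.add(() -> addRangeOfValues(items, s, e));
        }
        runAll(tasks);
        synchronized (sharedTotalLock) {
            return sharedTotal;
        }
    }

    // Runs every task on a fixed-size pool and waits for all of them to finish.
    static void runAll(List<Runnable> tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(
                Runtime.getRuntime().availableProcessors());
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (Runnable task : tasks) futures.add(pool.submit(task));
            for (Future<?> f : futures) f.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        int[] items = new int[10_000_000];
        for (int i = 0; i < items.length; i++) items[i] = i % 100;
        System.out.println("Subtotal parallel total: " + parallelSum(items));
    }
}
```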

When you create a parallel version of an operation, you need to be mindful of potential value corruption through the use of shared variables, and of the impact of the locking used to prevent that corruption. Also keep in mind that a task running the code inside a lock blocks every other task that needs the same lock, just as a person who takes a long time in the restroom causes a long queue of people waiting to use it. Code in a lock should be as short as possible and should not contain any actions that might take a while to complete. For example, a program should never perform input/output operations inside a locked block of code.
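Here is a minimal sketch of keeping the locked region short, using a hypothetical `ShortLockExample` class. The lock protects only the update to the shared total. The slow console output happens after the lock has been released and uses a snapshot of the value taken while the lock was held.

```java
// Sketch: lock only the shared-state update; do I/O outside the lock.
class ShortLockExample {
    private final Object totalLock = new Object(); // guards total
    private long total = 0;

    // The only code inside the lock: update the total and take a snapshot.
    long addAndSnapshot(long amount) {
        synchronized (totalLock) {
            total += amount;
            return total;
        }
    }

    // Console output is slow, so it runs after the lock has been released
    // and other threads are not kept waiting behind it.
    void addAndReport(long amount) {
        long snapshot = addAndSnapshot(amount);
        System.out.println("Total is now " + snapshot);
    }

    public static void main(String[] args) throws InterruptedException {
        ShortLockExample example = new ShortLockExample();
        Thread[] threads = new Thread[4];
        for (int i = 0; i < threads.length; i++) {
            final long amount = i + 1;
            threads[i] = new Thread(() -> example.addAndReport(amount));
            threads[i].start();
        }
        for (Thread t : threads) t.join();
        System.out.println("Final total: " + example.addAndSnapshot(0)); // 1 + 2 + 3 + 4 = 10
    }
}
```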

#Day2Of100DaysOfCode

GitHub: Locks
